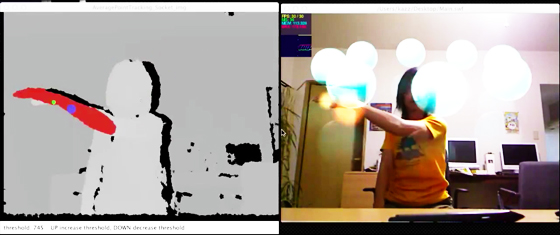

Playing a bit more with OpenKinect (Processing) + Flash

The Processing version of OpenKinect is super easy and fun, so I hooked it up to Flash over sockets and played with it a bit more. (I'll get back to serious work tomorrow.)

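Last time I only tried simple coordinate tracking. This time I took it a step further: in addition to those simple coordinates, I also pull the RGB video from the Kinect (strictly speaking, an image grabbed every frame) into Flash, and built a gesture-controlled "3D menu that sort of looks like AR".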

So, the video below is a demo of me sort of operating that sort-of-AR-looking 3D menu.

Here is the Processing code I used this time. (It throws an error on startup, but I don't really understand why, so I'm leaving it alone for now.)

import org.openkinect.*;

import org.openkinect.processing.*;

import processing.net.*;

import java.awt.image.*;

import java.awt.*;

import javax.imageio.*;

import java.net.DatagramPacket;

// Showing how we can farm all the kinect stuff out to a separate class

KinectTracker tracker;

// Kinect Library object

Kinect kinect;

//

Server imgServer;

Server pointServer ;

Client imgClient ;

Client pointClient;

boolean connected ;

String rcvString;

byte[] sendBytes;

InetSocketAddress remoteAddress;

DatagramPacket sendPacket;

PImage img;

void setup()

{

size(640,510);

frameRate(30);

kinect = new Kinect(this);

tracker = new KinectTracker();

//

imgServer = new Server(this, 5204);

pointServer = new Server(this, 6204);

//remoteAddress = new InetSocketAddress("localhost", 5204);

image(kinect.getVideoImage(),0,0);

}

void draw()

{

background(255);

// Run the tracking analysis

tracker.track();

// Show the image

tracker.display();

// Let's draw the raw location

PVector v1 = tracker.getPos();

fill(50,100,250,200);

noStroke();

ellipse(v1.x,v1.y,20,20);

// Let's draw the "lerped" location

PVector v2 = tracker.getLerpedPos();

fill(100,250,50,200);

noStroke();

ellipse(v2.x,v2.y,10,10);

// Display some info

int t = tracker.getThreshold();

fill(0);

text("threshold: " + t + " " + "UP increase threshold, DOWN decrease threshold", 8, 500);

//

if (imgClient == null) return;

//

img = kinect.getVideoImage();

updateImgSocket(img);//tracker.display);

updatePointSocket(int(v1.x), int(v1.y), int(v2.x), int(v2.y));

}

void keyPressed()

{

int t = tracker.getThreshold();

if (key == CODED)

{

if (keyCode == UP)

{

t+=5;

tracker.setThreshold(t);

}

else if (keyCode == DOWN)

{

t-=5;

tracker.setThreshold(t);

}

}

}

void stop()

{

tracker.quit();

super.stop();

}

// ----- Socket Sender ------

void updateImgSocket(PImage img)

{

BufferedImage bfImage = (BufferedImage)(img.getImage());

ByteArrayOutputStream bos = new ByteArrayOutputStream();

BufferedOutputStream os = new BufferedOutputStream(bos);

try

{

bfImage.flush();

ImageIO.write(bfImage, "jpg", os);

os.flush();

os.close();

}

catch(IOException e)

{

println("Error");

}

sendBytes = bos.toByteArray();

imgServer.write(sendBytes);

}

void updatePointSocket(int px1, int py1, int px2, int py2)

{

String str = px1 + "," + py1 + "\n" + px2 + "," + py2;

pointServer.write(str);

}

void serverEvent(Server srv, Client clt)

{

println("connected") ;

imgClient = clt ;

pointClient = clt ;

}

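This time the Processing side needed to send three things: the RGB image for every frame, the current XY coordinates, and the slightly earlier XY coordinates. I couldn't figure out how to send all of that over a single socket, so I opened two sockets, one for the image and one for the coordinates.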
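For reference, one common way to carry both kinds of data over a single socket is to prefix every message with a one-byte type tag and a four-byte length. The sketch below is only an illustration in plain Java and is not what the code in this post does. `FrameCodec`, `TYPE_IMAGE` and `TYPE_POINTS` are hypothetical names, and the receiver would have to read the same header before each payload.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Hypothetical helper: wraps a payload as [type: 1 byte][length: 4 bytes, big-endian][payload].
final class FrameCodec {
    static final byte TYPE_IMAGE = 1;   // assumed tag for JPEG bytes
    static final byte TYPE_POINTS = 2;  // assumed tag for the coordinate text

    // Builds one framed message that could be handed to a single Server.write(byte[]) call.
    static byte[] encode(byte type, byte[] payload) {
        ByteBuffer buf = ByteBuffer.allocate(1 + 4 + payload.length); // ByteBuffer is big-endian by default
        buf.put(type);
        buf.putInt(payload.length);
        buf.put(payload);
        return buf.array();
    }

    // Convenience wrapper for the "x1,y1\nx2,y2" coordinate text used in this post.
    static byte[] encodePoints(int px1, int py1, int px2, int py2) {
        String text = px1 + "," + py1 + "\n" + px2 + "," + py2;
        return encode(TYPE_POINTS, text.getBytes(StandardCharsets.UTF_8));
    }
}
```

Since Flash's `Socket` also reads big-endian by default, a receiver could presumably recover the header with `readByte()` followed by `readInt()` and then wait until that many payload bytes are available.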

On the image socket, the RGB image (PImage) obtained from the Kinect is JPEG-encoded into a byte array and sent over the socket.

void updateImgSocket(PImage img)

{

BufferedImage bfImage = (BufferedImage)(img.getImage());

ByteArrayOutputStream bos = new ByteArrayOutputStream();

BufferedOutputStream os = new BufferedOutputStream(bos);

try

{

bfImage.flush();

ImageIO.write(bfImage, "jpg", os);

os.flush();

os.close();

}

catch(IOException e)

{

println("Error");

}

sendBytes = bos.toByteArray();

imgServer.write(sendBytes);

}
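As a standalone illustration of the encoding step, the sketch below compresses a `BufferedImage` to JPEG bytes with `ImageIO`, outside of Processing. `JpegEncoder` and `toJpeg` are hypothetical names. It writes straight into the `ByteArrayOutputStream` (the extra `BufferedOutputStream` is not required for an in-memory stream) and first copies the pixels into a `TYPE_INT_RGB` image, on the assumption that the JPEG writer on some JDKs refuses images that carry an alpha channel.

```java
import java.awt.image.BufferedImage;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.UncheckedIOException;
import javax.imageio.ImageIO;

// Hypothetical helper, not part of the sketch above.
final class JpegEncoder {
    static byte[] toJpeg(BufferedImage src) {
        int w = src.getWidth();
        int h = src.getHeight();

        // Copy the pixels into an opaque RGB image; the alpha bits are dropped.
        BufferedImage rgb = new BufferedImage(w, h, BufferedImage.TYPE_INT_RGB);
        rgb.setRGB(0, 0, w, h, src.getRGB(0, 0, w, h, null, 0, w), 0, w);

        ImageIO.setUseCache(false); // keep intermediate data in memory, not in temp files
        ByteArrayOutputStream bos = new ByteArrayOutputStream();
        try {
            // ImageIO.write returns false when no writer accepts the image.
            if (!ImageIO.write(rgb, "jpg", bos)) {
                throw new IOException("no JPEG writer accepted the image");
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return bos.toByteArray();
    }
}
```

The returned array is what would be passed to `imgServer.write(...)`; a JPEG stream starts with the bytes `0xFF 0xD8`, which is a cheap sanity check on either side.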

The coordinate data is likewise converted to a string and then sent with Server.write.

void updatePointSocket(int px1, int py1, int px2, int py2)

{

String str = px1 + "," + py1 + "\n" + px2 + "," + py2;

pointServer.write(str);

}
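One thing to keep in mind with this format is that TCP is a byte stream: because the string has no terminator, two consecutive writes can arrive glued together on the receiving side. The sketch below shows a variant, under my own assumptions, that ends every message with `;` and picks the last complete message out of a received chunk. `PointMessage`, `format` and `parseLatest` are hypothetical names, and the `;` terminator is not part of the code above.

```java
// Hypothetical message helper; the ';' terminator is an assumption, not the original format.
final class PointMessage {
    // Formats "x1,y1\nx2,y2;" so the receiver can tell where a message ends.
    static String format(int px1, int py1, int px2, int py2) {
        return px1 + "," + py1 + "\n" + px2 + "," + py2 + ";";
    }

    // Returns {x1, y1, x2, y2} from the last complete message in the chunk, or null if there is none.
    static int[] parseLatest(String chunk) {
        int end = chunk.lastIndexOf(';');
        if (end < 0) return null;                        // no complete message yet
        int start = chunk.lastIndexOf(';', end - 1) + 1; // 0 when only one message is present
        String[] lines = chunk.substring(start, end).split("\n");
        if (lines.length != 2) return null;
        String[] now = lines[0].split(",");
        String[] past = lines[1].split(",");
        if (now.length != 2 || past.length != 2) return null;
        try {
            return new int[] {
                Integer.parseInt(now[0]), Integer.parseInt(now[1]),
                Integer.parseInt(past[0]), Integer.parseInt(past[1])
            };
        } catch (NumberFormatException e) {
            return null;                                 // malformed numbers: ignore the message
        }
    }
}
```

Taking only the latest complete message suits this use case, since stale positions are of no interest once a newer one has arrived.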

Next is the Flash side. The full code for this time is below.

package

{

import a24.tween.*;

import com.bit101.components.*;

import flash.display.Bitmap;

import flash.display.BitmapData;

import flash.display.BlendMode;

import flash.display.DisplayObject;

import flash.display.Loader;

import flash.display.Sprite;

import flash.errors.IOError;

import flash.events.Event;

import flash.events.IOErrorEvent;

import flash.events.MouseEvent;

import flash.events.ProgressEvent;

import flash.filters.BlurFilter;

import flash.geom.Point;

import flash.geom.Rectangle;

import flash.geom.Vector3D;

import flash.net.Socket;

import flash.text.TextField;

import flash.utils.ByteArray;

import net.hires.debug.Stats;

[SWF(width = "640", height = "480", backgroundColor = "0", frameRate = "30")]

public class Main extends Sprite

{

private var _imgsock:Socket;

private var _pointSok:Socket;

public var sp:Sprite = new Sprite();

public var sp2:Sprite = new Sprite();

private var _bm:Bitmap = new Bitmap();

private var _bmd:BitmapData = new BitmapData(640, 480, false, 0xFFFFFF);

private var _rect:Rectangle = new Rectangle(0, 0, 640, 480);

//

private var _container:Sprite = new Sprite();

private var _items:Vector.<Sprite>;

private var _radius:Number = 200;

private var _numItems:int = 8;

private var _chageAngle:Number = 360 / _numItems;

private var _v:Number = 0;

private var _isRotat:Boolean;

[Embed(source = 'img/img.png')]

private const Img:Class;

public function Main()

{

root.transform.perspectiveProjection.fieldOfView = 54;

root.transform.perspectiveProjection.projectionCenter = new Point( 640 * .5, 0 );

_bm.bitmapData = _bmd;

_bm.scaleX = -1;

_bm.x = stage.stageWidth;

addChild(_bm);

sp.graphics.beginFill( 0xFF6633 );

sp.graphics.drawCircle( 0, 0, 30);

sp.filters = [ new BlurFilter(16, 16, 2) ];

sp.blendMode = BlendMode.ADD;

addChild( sp );

sp2.graphics.beginFill( 0xFFAA66 );

sp2.graphics.drawCircle( 0, 0, 15);

sp2.filters = [ new BlurFilter(16, 16) ];

sp2.blendMode = BlendMode.ADD;

addChild( sp2 );

setup();

var stats:Stats = new Stats();

addChild(stats);

}

private function setup():void

{

_imgsock = new Socket();

_imgsock.addEventListener(IOErrorEvent.IO_ERROR, connectError);

_imgsock.addEventListener(ProgressEvent.SOCKET_DATA, receiveImage);

_imgsock.connect("localhost", 5204);

_imgsock.addEventListener( Event.CLOSE, function(event:Event):void { trace("closed: soc1"); });

_pointSok = new Socket();

_pointSok.addEventListener(IOErrorEvent.IO_ERROR, connectError);

_pointSok.addEventListener(ProgressEvent.SOCKET_DATA, receivePoint);

_pointSok.connect("localhost", 6204);

_pointSok.addEventListener( Event.CLOSE, function(event:Event):void { trace("closed: soc2"); });

function connectError(e:IOErrorEvent):void

{

trace( e );

}

//

_container.x = stage.stageWidth * .5;

_container.y = stage.stageHeight * .25;

addChild(_container);

_items = Vector.<Sprite>([]);

for ( var i:int = 0; i < _numItems; i++ )

{

var bm:Bitmap = new Img();

var img:BitmapData = bm.bitmapData;

var angle:Number = Math.PI * 2 / _numItems * i;

var item:Item = new Item( 120, 120, 0, img );

_container.addChild( item );

item.x = Math.cos( angle ) * _radius;

item.z = Math.sin( angle ) * _radius;

_items.push( item );

item.blendMode = BlendMode.ADD;

}

}

// ------------- Socket Receiver ---------------------------------------------------- /

private function receivePoint(e:ProgressEvent):void

{

var str:String = _pointSok.readMultiByte(_pointSok.bytesAvailable, "utf-8");

if(!str || str == "") return;

var list:Array = str.split("\n");

if( list.length < 2 ) return; // ignore chunks that do not contain both coordinate lines

var nowPos:Array = list[0].split(",");

var pastPos:Array = list[1].split(",");

if(nowPos[0] >= 0 && nowPos[1] >= 0)

{

sp.x = nowPos[0];

sp.y = nowPos[1];

//

sp2.x = pastPos[0];

sp2.y = pastPos[1];

}

if( _isRotat ) return;

if( Math.abs( sp.x - sp2.x ) > 50 && Math.abs( sp.x - stage.stageWidth * 2 ) > 100 )

{

var dist:int = sp.x - sp2.x;

var r:Number;

if( dist < 0 )

{

if( dist < -90 )

{

r = _container.rotationY + _chageAngle * 4;

}

else if( dist < -70 && dist >= -90)

{

r = _container.rotationY + _chageAngle * 2;

}

else

{

r = _container.rotationY + _chageAngle;

}

}

else

{

if( dist > 90 )

{

r = _container.rotationY - _chageAngle * 4;

}

else if( dist > 70 && dist <= 90)

{

r = _container.rotationY - _chageAngle * 2;

}

else

{

r = _container.rotationY - _chageAngle;

}

}

_isRotat = true;

addEventListener( Event.ENTER_FRAME, update );

Tween24.serial(

Tween24.tween( _container, .5, Ease24._2_QuadOut ).rotationY( r ),

Tween24.wait( .1 ),

Tween24.func( function():void{

_isRotat = false;

removeEventListener( Event.ENTER_FRAME, update );

})

).play();

}

}

private function receiveImage(e:ProgressEvent):void

{

var ba:ByteArray = new ByteArray();

_imgsock.readBytes(ba, 0, _imgsock.bytesAvailable);

if( ba.bytesAvailable < 10000 ) return;

var loader:Loader = new Loader();

loader.loadBytes( ba );

loader.contentLoaderInfo.addEventListener( Event.COMPLETE, onComplete);

loader.contentLoaderInfo.addEventListener( IOErrorEvent.IO_ERROR, onIOError );

function onComplete(e:Event):void

{

loader.contentLoaderInfo.removeEventListener( Event.COMPLETE, onComplete);

loader.contentLoaderInfo.removeEventListener( IOErrorEvent.IO_ERROR, onIOError );

var bm:Bitmap = loader.content as Bitmap;

_bmd = bm.bitmapData;

_bm.bitmapData = _bmd;

}

function onIOError(E:IOErrorEvent):void

{

loader.contentLoaderInfo.removeEventListener( Event.COMPLETE, onComplete);

loader.contentLoaderInfo.removeEventListener( IOErrorEvent.IO_ERROR, onIOError );

}

}

// -------------------- Items Depth Sort -------------------------------------------------- /

private function sortItems():void

{

_items.sort( depthSort );

for ( var i:int = 0; i < _items.length; i++ )

{

_container.addChildAt( _items[ i ] as Sprite, i );

}

}

private function depthSort( objA:DisplayObject, objB:DisplayObject ):int

{

var posA:Vector3D = objA.transform.matrix3D.position;

posA = _container.transform.matrix3D.deltaTransformVector( posA );

var posB:Vector3D = objB.transform.matrix3D.position;

posB = _container.transform.matrix3D.deltaTransformVector( posB );

return posB.z - posA.z;

}

// -------------------- Update -------------------------------------------------- /

private function update( e:Event ):void

{

sortItems();

for ( var i:int = 0; i < _items.length; i++ )

{

_items[ i ].rotationY = -_container.rotationY;

if(i == _items.length - 1)

{

_items[ i ].alpha = 1;

}

else if(i < _items.length - 1 && i >= _items.length - 3)

{

_items[ i ].alpha = .9;

}

else if(i < _items.length - 3 && i >= _items.length - 5)

{

_items[ i ].alpha = .8;

}

else

{

_items[ i ].alpha = .85;

}

}

}

}

}

// -------------------- MenuItem -------------------------------------------------- /

import flash.display.Bitmap;

import flash.display.BitmapData;

import flash.display.Sprite;

import flash.filters.BlurFilter;

import flash.geom.ColorTransform;

import flash.geom.Matrix;

import flash.geom.Point;

import flash.text.TextField;

internal class Item extends Sprite

{

private var _bmd:BitmapData;

private var _image:BitmapData;

private var _mask:GradietionMask;

public function Item( w:int, h:int, color:int, img:BitmapData )

{

_image = img;

var image:Bitmap = new Bitmap( img );

image.x = -image.width * .5;

image.y = -image.height * .5;

addChild( image );

this.cacheAsBitmap = true;

mirror( w, h, -w * .5, h * .5 )

}

private function mirror( w:int, h:int, offsetX:Number, offsetY:Number ):void

{

var bmd:BitmapData = _image.clone();

bmd.colorTransform( bmd.rect, new ColorTransform( 1, 1, 1, .5 ));

var bm:Bitmap = new Bitmap( bmd );

var refrect:Sprite = new Sprite;

refrect.cacheAsBitmap = true;

refrect.scaleX = refrect.scaleY = -1;

refrect.x = offsetX + bm.width;

refrect.y = offsetY + bm.height;

addChild( refrect );

refrect.addChildAt( bm, 0 );

var gradietionMask:Sprite = new GradietionMask( w, bm.height );

gradietionMask.rotation = 90

gradietionMask.x = w * .5;

gradietionMask.y = offsetY;

gradietionMask.cacheAsBitmap = true;

addChild( gradietionMask );

refrect.mask = gradietionMask;

}

}

import flash.display.Sprite;

import flash.display.Graphics;

internal class GradietionMask extends Sprite

{

public function GradietionMask( w:int, h:int )

{

var g:Graphics = this.graphics;

g.beginGradientFill( "linear", [ 0xFFFFFF, 0xFFFFFF ], [ 1, .01 ], [ 0, 230 ]);

g.drawRect( 0, 0, 120, 120 );

g.endFill();

}

}

On the Flash side, the received byte array is turned back into a Bitmap with Loader.loadBytes, and that Bitmap's BitmapData is then used for display.

private function receiveImage(e:ProgressEvent):void

{

var ba:ByteArray = new ByteArray();

_imgsock.readBytes(ba, 0, _imgsock.bytesAvailable);

if( ba.bytesAvailable < 10000 ) return;

var loader:Loader = new Loader();

loader.loadBytes( ba );

loader.contentLoaderInfo.addEventListener( Event.COMPLETE, onComplete);

loader.contentLoaderInfo.addEventListener( IOErrorEvent.IO_ERROR, onIOError );

function onComplete(e:Event):void

{

loader.contentLoaderInfo.removeEventListener( Event.COMPLETE, onComplete);

loader.contentLoaderInfo.removeEventListener( IOErrorEvent.IO_ERROR, onIOError );

var bm:Bitmap = loader.content as Bitmap;

_bmd = bm.bitmapData;

_bm.bitmapData = _bmd;

}

function onIOError(E:IOErrorEvent):void

{

loader.contentLoaderInfo.removeEventListener( Event.COMPLETE, onComplete);

loader.contentLoaderInfo.removeEventListener( IOErrorEvent.IO_ERROR, onIOError );

}

}
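The handler above treats whatever bytes are available in one SOCKET_DATA event as a whole JPEG and simply drops chunks smaller than 10000 bytes. A more robust approach is to buffer incoming bytes until a full frame has arrived. The sketch below shows that idea in Java, assuming (unlike the code in this post) that the sender prefixes each image with a 4-byte big-endian length. `FrameAssembler` and `feed` are hypothetical names.

```java
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Hypothetical receiver-side buffer for [length: 4 bytes, big-endian][payload] frames.
final class FrameAssembler {
    private byte[] pending = new byte[0]; // bytes received so far that do not yet form a full frame

    // Appends a received chunk and returns every frame that is now complete.
    List<byte[]> feed(byte[] chunk) {
        byte[] joined = Arrays.copyOf(pending, pending.length + chunk.length);
        System.arraycopy(chunk, 0, joined, pending.length, chunk.length);

        ByteBuffer buf = ByteBuffer.wrap(joined);
        List<byte[]> frames = new ArrayList<>();
        while (buf.remaining() >= 4) {
            buf.mark();
            int length = buf.getInt();
            if (length < 0) throw new IllegalStateException("corrupt length prefix");
            if (buf.remaining() < length) {
                buf.reset();              // payload incomplete: rewind and wait for more data
                break;
            }
            byte[] frame = new byte[length];
            buf.get(frame);
            frames.add(frame);
        }
        // Keep the unconsumed tail for the next call.
        pending = Arrays.copyOfRange(joined, buf.position(), joined.length);
        return frames;
    }
}
```

In ActionScript the same logic would presumably live in `receiveImage`, appending to a long-lived `ByteArray` and calling `Loader.loadBytes` only once a whole frame has been collected.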

As for the coordinates, the received bytes are read as a string, which is then parsed into two arrays: the current coordinates and the slightly earlier coordinates.

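Then a very simple calculation is done on the current and slightly earlier coordinates: when their horizontal distance exceeds 50 pixels, the rotation angle (rotationY) of the 3D-style menu is changed by one, two, or four items (45 degrees each) depending on how large the movement was, and a tween rotates the menu.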

private function receivePoint(e:ProgressEvent):void

{

var str:String = _pointSok.readMultiByte(_pointSok.bytesAvailable, "utf-8");

if(!str || str == "") return;

var list:Array = str.split("\n");

if( list.length < 2 ) return; // ignore chunks that do not contain both coordinate lines

var nowPos:Array = list[0].split(",");

var pastPos:Array = list[1].split(",");

if(nowPos[0] >= 0 && nowPos[1] >= 0)

{

sp.x = nowPos[0];

sp.y = nowPos[1];

//

sp2.x = pastPos[0];

sp2.y = pastPos[1];

}

if( _isRotat ) return;

if( Math.abs( sp.x - sp2.x ) > 50 && Math.abs( sp.x - stage.stageWidth * 2 ) > 100 )

{

var dist:int = sp.x - sp2.x;

var r:Number;

if( dist < 0 )

{

if( dist < -90 )

{

r = _container.rotationY + _chageAngle * 4;

}

else if( dist < -70 && dist >= -90)

{

r = _container.rotationY + _chageAngle * 2;

}

else

{

r = _container.rotationY + _chageAngle;

}

}

else

{

if( dist > 90 )

{

r = _container.rotationY - _chageAngle * 4;

}

else if( dist > 70 && dist <= 90)

{

r = _container.rotationY - _chageAngle * 2;

}

else

{

r = _container.rotationY - _chageAngle;

}

}

_isRotat = true;

addEventListener( Event.ENTER_FRAME, update );

Tween24.serial(

Tween24.tween( _container, .5, Ease24._2_QuadOut ).rotationY( r ),

Tween24.wait( .1 ),

Tween24.func( function():void{

_isRotat = false;

removeEventListener( Event.ENTER_FRAME, update );

})

).play();

}

}
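The thresholds in `receivePoint` can be restated more compactly. The sketch below reproduces the same mapping from horizontal movement to rotation in Java, purely as an illustration. `SwipeRotation`, `steps` and `targetRotation` are hypothetical names, and the extra `stage.stageWidth` check as well as the tween are left out.

```java
// Hypothetical restatement of the swipe thresholds used in receivePoint.
final class SwipeRotation {
    static final int MIN_SWIPE = 50; // movements of 50 px or less are ignored

    // Signed number of menu items to rotate by; 0 when the movement is too small.
    // Moving left (negative distance) gives positive steps, as in the original code.
    static int steps(int nowX, int pastX) {
        int dist = nowX - pastX;
        int magnitude = Math.abs(dist);
        if (magnitude <= MIN_SWIPE) return 0;
        int count = magnitude > 90 ? 4 : (magnitude > 70 ? 2 : 1);
        return dist < 0 ? count : -count;
    }

    // Target rotationY in degrees for a menu with numItems evenly spaced items.
    static double targetRotation(double currentRotationY, int nowX, int pastX, int numItems) {
        return currentRotationY + steps(nowX, pastX) * (360.0 / numItems);
    }
}
```

With the 8 items used here, one step is 45 degrees, so a fast swipe of more than 90 pixels turns the menu halfway around.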

And that's roughly how I did it this time.

Now that this experiment seems to let me pick up gestures from the Kinect...

Next, I'd like to use these gestures to control an AR.Drone.